Keeping aging systems on their feet is a daunting and resource-intensive task. The U.S. Air Force, for example, continually wages an internal battle to keep its weapons systems in fighting form. One enormous and often overlooked factor contributing to the early demise of military technologies is the problem of unavailable parts. Take the B-2 Spirit, a stealth bomber that first flew in 1989: by 1996, significant components of the aircraft’s defensive management system, just one small part of its electronics, were obsolete. Repairing the system entailed either redesigning a few circuit boards and replacing other obsolete integrated circuits for US $21 million, as the B-2 program officers chose to do, or spending $54 million to have the original contractor replace the whole system. The electronics, in essence, were fine—they just couldn’t easily be fixed if even the slightest thing went wrong.

Although mundane in its simplicity, the inevitable depletion of crucial components as systems age has sweeping, potentially life-threatening consequences. At the very least, the quest for an obsolete part can escalate into an unexpected, budget-busting expense. Electronics obsolescence—also known as DMSMS, for diminishing manufacturing sources and material shortages—is a huge problem for designers who build systems that must last longer than the next cycle of technology. For instance, by the time the U.S. Navy began installing a new sonar system in surface ships in 2002, more than 70 percent of the system’s electronic parts were no longer being made. And it’s not just the military: commercial airplanes, communications systems, and amusement-park rides must all be designed around this problem, or the failure of one obsolete electronic part can easily balloon into a much larger system failure.


The crux is that semiconductor manufacturers mainly answer the needs of the consumer electronics industry, whose products are rarely supported for more than four years. Dell lists notebook computer models in its catalog for about 18 months. This rapid turnover hurts designers whose products have long lead times and even longer field lives, because each new generation of parts introduces materials, components, and processes that are incompatible with older ones.

The defining characteristic of an obsolete system is that its design must be changed or updated merely to keep the system in use. Qinetiq Technology Extension Corp., in Norco, Calif., a company that provides obsolescence-related resources, estimates that approximately 3 percent of the global pool of electronic components becomes obsolete each month. PCNAlert, a commercial service that disseminates notices from manufacturers that are about to discontinue or alter a product, reports receiving about 50 discontinuance alerts a day. In my capacity as an associate professor of mechanical engineering at the University of Maryland, College Park, and a member of the university’s Center for Advanced Life Cycle Engineering, I have been developing tools to forecast and resolve obsolescence problems. To deal with that growing pile of unavailable supplies, engineers in charge of long-lasting systems must basically predict the future—they must learn to plan well in advance, and more carefully than ever before, for the day their equipment will start to fail.

The systems hit hardest by obsolescence are the ones that must perform nearly flawlessly. Technologies for mass transit, medicine, the military, air-traffic control, and power-grid management, to name a few, require long design and testing cycles, so they cannot go into operation soon after they are conceived. Because they are so costly, they can return the investment only if they are allowed to operate for a long time, often 20 years or more. Indeed, by 2020, the U.S. Air Force projects that the average age of its aircraft will exceed 30 years—although some of the electronics will no doubt have been replaced by then.

Some of the best examples of obsolescence come from the U.S. military, because it has been managing long-cycle technology programs longer than just about any other organization in the world. But there are also commercial aircraft that fall into the same, almost incredible age range of 40 to 90 years. The Boeing 737 was introduced in 1965 and the 747 in 1969; neither is expected to retire anytime soon.

When those systems were first built, the problem of obsolete electronics was only vaguely addressed, if at all, because the military still ruled the electronics market, and the integrated circuits they needed remained available for much longer than they do now. In the 1960s, the expected market availability for chips was between 20 and 25 years; now it’s between two and five. Call it the dark side of Moore’s Law, which states that the number of transistors on a chip doubles roughly every 18 to 24 months: poor planning for parts obsolescence causes companies and militaries to spend progressively more to deal with the effects of aging systems, which leaves even less money for new investment, in effect creating a downward spiral of maintenance costs and delayed upgrades.

The situation is a lot like owning a car, which sooner or later begins to show its wear. First the brakes may need to be replaced, say, for $1000. Then it’s the transmission—$2000. Pretty soon you start wishing you’d just bought a new car, except that the money you’ve sunk into maintaining the old car makes it likely that you won’t be able to afford a new one.

The Defense Department spends an estimated $10 billion a year managing and mitigating electronic-part obsolescence. In some cases, obsolescence can trigger the premature overhaul of a system. The F-16 program, for example, spent $500 million to redesign an obsolete radar. In the commercial world, telecommunications companies spend lots of money managing obsolescence in infrastructure products, such as emergency-response telephone systems. One way to deal with it is to replace a failed device with a wholly redesigned one; another is to stockpile warehouses full of parts to cover the projected lifetime of a system. Both options cost money that might have otherwise been spent on expanding the business.

Obsolescence also isn’t limited to hardware. Obsolete software can be just as problematic, and frequently the two go hand in hand. For example, an obsolescence analysis of a GPS radio for a U.S. Army helicopter found that a hardware change that required revising even a single line of code would result in a $2.5 million expense before the helicopter could be deemed safe for flight.

There is a way out of the obsolescence mess, but first we need to understand how systems became so entangled in the first place. In the United States, electronic-part obsolescence began to emerge as a distinct problem in the 1980s, with the end of the Cold War. To save money and to open up the military to the more advanced components developed by the commercial world, the Pentagon began relying much less on custom-made “mil-spec” (short for military-specification) parts, which are held to more stringent performance requirements than commercial products. This policy, called acquisition reform, affected many nonmilitary applications as well, such as commercial avionics and oil-well drilling and some telecommunications products, which had historically depended on mil-spec parts because they were produced over long periods of time. Now at least 90 percent of the components in military communications systems are commercial off-the-shelf products, and even in weapons systems the figure comes to 20 percent, mostly in the network interface. The vast majority of the memory chips and processors in military systems come from commercial sources.

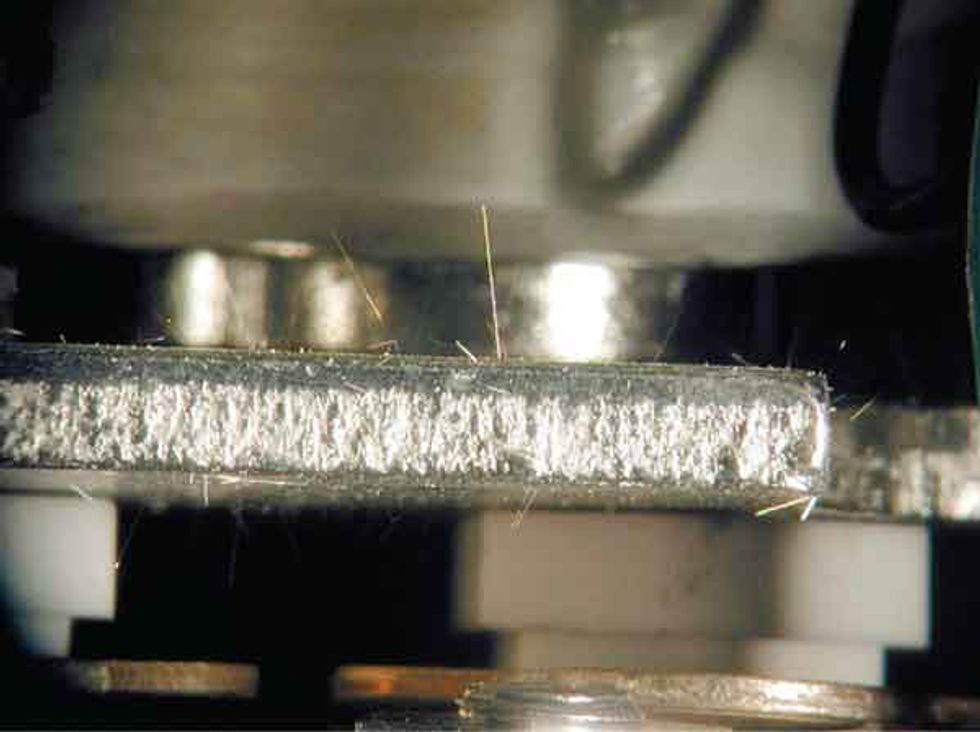

A European Union–driven ban on the use of lead in electronic components that took effect in July 2006 has exacerbated the situation. Although military, avionics, and most other long-lasting systems are actually exempt from the directive, those exemptions amount to little. Consumer electronics makers aiming their products at international markets comply with the ban, which in turn pushes the IC manufacturers to remove all lead from their parts. The main consequence of the ban is that traditional solder, which contained lead, had to be replaced with a lead-free alloy that was sufficiently cheap and also had the attractive mechanical, thermal, and electrical properties of lead. Many such alloys, however, tend to sprout tiny “whiskers” over time, potentially causing short-circuiting. In 2005, for example, a nuclear reactor at the Millstone Power Station, in Connecticut, was shut down because some of its diodes had malfunctioned after forming whiskers, and in 2000 a $200 million Boeing satellite was declared a total loss after whiskers sprouted on a space-control processor. Both the unavailability of lead-based parts and the unreliability of some of their replacements are very real issues that plague longer-lasting systems.

Sneaky Splinters: Tiny conductive whiskers grow within an electromagnetic relay. Systems can fail if whiskers cause short-circuiting or arcing.

Photo: NASA Electronic Parts and Packaging Program

The absence of crucial parts now fuels a multibillion-dollar industry of obsolescence forecasting, reverse-engineering outfits, foundries, and, unfortunately, a thriving market of counterfeits. Without advance planning, only the most expensive or risky options for dealing with obsolescence tend to remain open. The most straightforward solution (looking for a replacement part from a different manufacturer or finding it on eBay) faces substantial hurdles. For example, a part purchased from an unapproved vendor can involve costly and time-consuming “requalification” testing to determine that the replacement is entirely reliable and not counterfeit, a possibility that at the very least poses serious risks. [See “Bogus!,” IEEE Spectrum, May 2006.]

Then there’s the not-so-small matter of finding the right parts, which may exist in sufficient supply but be nearly impossible to track down. John Becker, former head of obsolescence planning for the Defense Department, tells me that the Federal Logistics Information System databases encode parts made by 3M in 64 different formats. That includes small differences in the way the company’s name appears, like 3M versus 3 M or MMM, and so on. And that number doesn’t even count the Air Force, Army, and Navy databases, nor all the ways that Lockheed Martin, Boeing, or other contractors might keep track of 3M’s parts. A standardized method for encoding names and serial numbers would eliminate a lot of obsolescence cases by making it easier to track down the original parts, close substitutes, or upgraded versions.
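To make the naming problem concrete, here is a minimal sketch of how variant spellings of one manufacturer might be collapsed to a single canonical name. The alias table and the function name are hypothetical, chosen only for illustration; they do not describe how any real logistics database is organized.

```python
import re

# Hypothetical alias table: maps a normalized spelling to one canonical name.
# The entries are illustrative and not drawn from any real parts database.
ALIASES = {
    "3M": "3M",
    "MMM": "3M",
}


def canonical_manufacturer(raw: str) -> str:
    """Strip spacing, punctuation, and case, then look up a known alias."""
    key = re.sub(r"[^A-Z0-9]", "", raw.upper())
    # Unknown names fall back to their normalized form.
    return ALIASES.get(key, key)


# Five spellings of the same company, as they might appear in different records.
records = ["3M", "3 M", "MMM", "3-m", "3M."]
canonical = {canonical_manufacturer(r) for r in records}
print(canonical)
```

With a shared convention like this, a search for one company's part would no longer miss records simply because the name was typed differently.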

For truly critical cases, procurement officers can turn to aftermarket manufacturers that are authorized by companies like Intel and Texas Instruments to resurrect their discontinued integrated circuits. An original manufacturer might give Lansdale Semiconductor or Rochester Electronics uncut wafers, which can later be finished on demand. Although reliable, this custom-assembly approach costs about $54 000 (in 2006 dollars) for a production run that might yield as few as 50 units of one integrated circuit, according to a report by ARINC, an operations consulting firm. (Rochester disputes this figure, saying it can resurrect a TI wafer for between $5000 and $20 000.)

Another option is to redesign or reverse-engineer the part, but that could take 18 months. If the system in question is critical, a year and a half is simply too long. The ARINC analyses indicate that a redesign can run between $100 000 and $600 000, and even that may be conservative.

To the Boneyard: Eventually, obsolete subsystems overwhelm even the hardiest aircraft, dooming them to retirement in desert corrals.

Photo: Matthew Clark

The most common plan—when a company or defense program has a plan—is to wait until a supplier announces the end of a product’s manufacturing cycle and then place a final order, hoarding the extra parts the company expects it will need in order to support the product throughout its lifetime. But such lifetime purchases can turn out to be trickier than one might expect.

First, not all manufacturers send out their alerts far enough in advance to allow their customers time to request a final factory run. The Government-Industry Data Exchange Program, or GIDEP, estimates that it receives about 50 to 75 percent of the obsolescence notices that are relevant to the Defense Department. Even then, not all of those alerts reach program managers before the product has been discontinued, despite the industry standard of 90 days’ notice.

Second, it can be hard to know how many parts to stockpile. For inexpensive parts, lifetime buys are likely to be well in excess of forecasted demand, because a manufacturer may set a minimum purchase amount. Consider one major telecommunications company (which wishes to remain unnamed for competitive reasons) that typically buys enough parts to fulfill its anticipated lifetime needs every time a component becomes obsolete. Currently, the company holds an inventory of more than $100 million in obsolete electronics, some of which will not be used for a decade, if ever. In the meantime, parts can be lost, degrade with age, or get pilfered by another product group—all scenarios that routinely undermine even the best intentions of project managers.
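The effect of a minimum purchase amount on an inexpensive part can be shown with a little arithmetic. The sketch below is a simplified illustration: the function, its parameters, and all the numbers are assumptions made up for the example, not figures from any company mentioned here.

```python
import math


def lifetime_buy_quantity(annual_demand, years_of_support, safety_margin, minimum_order):
    """Units to order in a final buy: forecast demand plus a safety margin,
    raised to the manufacturer's minimum purchase if that is larger."""
    forecast = annual_demand * years_of_support
    padded = math.ceil(forecast * (1 + safety_margin))
    return max(padded, minimum_order)


# Illustrative numbers only: 40 units a year for 10 years, a 25 percent margin,
# and a manufacturer that will not run fewer than 5000 units.
forecast_demand = 40 * 10
needed = lifetime_buy_quantity(annual_demand=40, years_of_support=10,
                               safety_margin=0.25, minimum_order=5000)
excess = needed - forecast_demand
print(needed, excess)
```

In this made-up case the minimum order, not the forecast, sets the size of the purchase, and most of the stock sits in a warehouse with no planned use.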

The answer, then, involves more than removing bureaucratic hurdles. Better databases don’t obviate the somewhat spontaneous, panic-driven responses that program managers can feel forced to make when they see that a part’s production is about to cease. Ultimately, only a focus on strategies that try to predict the future can offer dramatic improvements to product managers facing component obsolescence.

Such companies as i2 Technologies, Qinetiq, Total Parts Plus, and PartMiner have produced commercial tools that forecast obsolescence by modeling a part’s life cycle. To derive a forecast, the services weigh a product’s technical attributes—for example, minimum feature size, logic family, number of gates, type of substrate, and type of process—to rank parts by their stages of maturity, from introduction through growth, maturity, decline, phase out, and obsolescence. At the Center for Advanced Life Cycle Engineering, my colleagues and I have added another dimension to the prediction, by mining commercial vendors’ parts databases for specific market data, including peak sales years and last order dates.

Seed Vault: Ten billion obsolete dies sit in a wafer bank at Rochester Electronics.

Photo: Bob O’Connor

Different factors drive the obsolescence of different electronic parts, and life-cycle modeling must be tailored to the type of part. Monolithic flash memory is one simple case: it turns out that successive generations of memory have fairly predictable peak sales years that correlate closely with an increase in memory size. By plotting this relationship, we see that one key attribute, the number of megabits, drives the obsolescence of monolithic flash memory. I analyzed historical data from different manufacturers to see when they required final orders for a given flash-memory product. (After a final order, a product is considered obsolete, even though it may still be available through aftermarket sources for some time.) To predict when a part type is likely to become obsolete, I used a manufacturer’s last order dates, expressed as a number of standard deviations after the peak sales year, to make a histogram for different versions of the product. For each size of a particular Atmel flash-memory chip, it turned out that the last order dates, on average, came 0.88 standard deviations past the peak sales year.
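The forecasting step described above can be sketched in a few lines. This is a simplified illustration, not the actual forecasting tool: it assumes the sales curve is roughly symmetric, so that the sales-weighted mean year stands in for the peak sales year, and it uses invented sales figures. The 0.88 offset is the average reported above for one particular Atmel flash-memory chip and would differ for other parts and manufacturers.

```python
import math


def sales_curve_moments(sales_by_year):
    """Return the sales-weighted mean year and standard deviation.

    Assumption: the curve is roughly symmetric, so the mean approximates
    the peak sales year.
    """
    total = sum(sales_by_year.values())
    mean = sum(year * units for year, units in sales_by_year.items()) / total
    variance = sum(units * (year - mean) ** 2
                   for year, units in sales_by_year.items()) / total
    return mean, math.sqrt(variance)


def forecast_last_order_year(sales_by_year, offset_in_sigmas=0.88):
    """Place the expected last order date a fixed number of standard
    deviations after the estimated peak sales year."""
    mean, sigma = sales_curve_moments(sales_by_year)
    return mean + offset_in_sigmas * sigma


# Invented unit sales (in millions) for one memory size.
sales = {1998: 1, 1999: 4, 2000: 6, 2001: 4, 2002: 1}
peak_year, sigma = sales_curve_moments(sales)
forecast = forecast_last_order_year(sales)
print(peak_year, sigma, forecast)
```

For a part that is still selling, the peak year and spread would themselves have to be projected, which is where the relationship between memory size and peak sales year comes in.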

For other components, however, the key attribute driving obsolescence may be less clear. Take the obsolescence of an operational amplifier. No single attribute drives its obsolescence, so I turned again to data mining and plotted 2400 data points from seven manufacturers to find the number of years a part could be procured from its original manufacturer. Operational amplifiers introduced in 1994 could be procured for eight years; for those introduced in 2004, that availability had dropped to less than two years.

However, predicting when parts will become unavailable is still not enough information on which to build a business plan. A product manager also needs to know what to do once that date arrives. For this we turn to refresh planning. The goal of refresh planning is to find the best date to upgrade a product and to identify the system components on which the redesign should focus. I have developed one such methodology, called mitigation of obsolescence cost analysis, or MOCA, which determines when a design refresh should occur, what the new design should accomplish, and how to manage the parts that go obsolete before that time.

The software starts with a system’s bill of materials. For each component, whether a chip, a circuit board, or even a software application, we estimate the date by which we expect it to become obsolete and the cost of procuring it before that time. Based on those estimates, MOCA determines a timeline of all possible design refresh dates, in which a design refresh is primarily intended to preserve, rather than improve, a system’s functionality. (For many long-life systems, this is all a manager really wants.) The tool comes up with a set of possible design plans, starting with zero design refreshes (meaning that the best strategy is to be reactive, for example by finding substitute parts or reverse-engineering) up to and including the plan that entails a refresh for every component that is expected to go obsolete. MOCA takes into account uncertainty as well, by assigning a probability to each projected obsolescence event.
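The idea of comparing every candidate plan, from zero refreshes upward, can be illustrated with a toy model. The sketch below is not MOCA: it is a deliberately simplified, deterministic stand-in that ignores probabilities, requalification, storage, and the cost of money. The function names, the cost model, and all the figures are assumptions invented for the example.

```python
from itertools import combinations


def plan_cost(refresh_years, parts, end_year, refresh_cost):
    """Cost of one plan under a toy model: each refresh costs a fixed amount,
    and every obsolete part is stockpiled from its obsolescence year until
    the next refresh, or until the end of support if no refresh follows."""
    total = refresh_cost * len(refresh_years)
    for obsolete_year, annual_units, unit_price in parts:
        later = [y for y in refresh_years if y >= obsolete_year]
        bridge_end = min(later) if later else end_year
        total += annual_units * unit_price * (bridge_end - obsolete_year)
    return total


def best_plan(parts, candidate_years, end_year, refresh_cost):
    """Try every subset of candidate refresh years, including the empty one."""
    plans = (combo
             for n in range(len(candidate_years) + 1)
             for combo in combinations(candidate_years, n))
    return min(plans, key=lambda p: plan_cost(p, parts, end_year, refresh_cost))


# Invented bill of materials: (obsolescence year, units per year, unit price).
parts = [(2008, 100, 50.0), (2010, 200, 20.0), (2015, 50, 400.0)]
candidates = [2010, 2015, 2020]

expensive_refresh = best_plan(parts, candidates, end_year=2025, refresh_cost=500_000)
cheap_refresh = best_plan(parts, candidates, end_year=2025, refresh_cost=100_000)
print(expensive_refresh, cheap_refresh)
```

With these made-up numbers, a costly refresh makes the zero-refresh plan cheapest, while a cheaper refresh shifts the optimum to a single refresh in 2015. Even in a toy model, the answer depends on the particulars of the system.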

The results are not easy to generalize and depend heavily on the peculiarities of each system, which underscores why planning around obsolescence can be so difficult. A MOCA study from 2000 on the engine controller for a regional jet, for example, found that the optimum solution entailed four design refreshes before 2006. Many other systems’ analyses, however, have concluded that scheduling zero refreshes is the most cost-effective plan. This means, for example, that the ideal approach could be to merely stock up before a part ceases production to cover the lifetime of the system. Usually it’s a combination of measures. The costs of requalification testing, of buying and storing spare parts, and of performing the design refresh itself are all included in the tool’s life-cycle analyses.

In 2003, we looked at a radar system in the F-22 Raptor. Using 1998 data, we projected that the optimal time for a design refresh would be in 2004. Northrop Grumman then did an independent analysis and drew the same conclusion. This was a good proof of concept for our predictive abilities: MOCA could predict six years in advance what later turned out to be the best course of action.

While it’s nice to know ahead of time what you’ll need to do in the future, this kind of analysis is also a money-saving measure. A study done in 2005 on a Motorola radio-frequency base station communications system identified a design refresh plan that would cost about one-fourth of what it would cost to stock up on each part that might go obsolete during the lifetime of the base station. Motorola builds and maintains more than 100 000 systems for longer than 20 years.

Traditionally, the company addressed obsolescence events as they occurred, deciding on a case-by-case basis whether to perform a design refresh or order a lifetime supply of parts. This system management technique was in essence a “death by a thousand cuts” scenario, whereby valuable resources were directed to fund a continuous stream of independent decisions on how to manage parts. The company should have been looking instead at each part in the context of the product as a whole. The analysis concluded that Motorola’s best course of action would be to perform one design refresh in 2011 and buy sufficient parts to cover any obsolescence that occurs before then; doing no refreshes (and instead making only lifetime buys) would cost $33 million more. The key to a successful refresh schedule is deciding on it well in advance, so that a project’s budget can include that expense before irreplaceable parts become a serious business liability.

About the Author

Peter Sandborn is a University of Maryland professor of mechanical engineering. In “Trapped on Technology’s Trailing Edge,” he explores the dark side of Moore’s Law, where technological change translates into nightmares, not opportunities. “Every time I have to buy more memory for my PC to run a new version of software, the impact of technology obsolescence hits home,” Sandborn says.