This is part of IEEE Spectrum’s Special Report: 25 Microchips That Shook the World.

Contents

- 1 Gordon Moore

- 2 Vinod Khosla

- 3 Carver Mead

- 4 Steve Jurvetson

- 5 Sophie Vandebroek

- 6 Morris Chang

- 7 David Ditzel

- 8 Jeff Hawkins

- 9 Lee Felsenstein

- 10 Frederick P. Brooks, Jr.

- 11 Allen Baum

- 12 Nick Tredennick

- 13 Robert A. Pease

- 14 Gordon Bell

- 15 T.J. Rodgers

- 16 Robert Metcalfe

- 17 David Liddle

- 18 Sophie Wilson

- 19 Vinton Cerf

- 20 Ali Hajimiri

- 21 Charles G. Sodini

- 22 Hiromichi Fujisawa

- 23 James Meindl

- 24 Francine Berman

- 25 Nance Briscoe

Gordon Moore

Cofounder and chairman emeritus of Intel

Photo: Intel Corp.

There were lots of great chips, but one that will always be dear to me was the Intel 1103, the first commercial 1024-bit DRAM [introduced in 1970]. It was the chip that really got Intel over the hump to profitability. It was not the most elegant design, having many of the problems that memory engineers had become familiar with in core memories.

But this was comforting to such engineers—it meant that their expertise was not going to be made obsolete by the new technology. Even today, when I look at my digital watch and see 11:03, I cannot help but remember this key product in Intel’s history.

Photo: Khosla Ventures

Vinod Khosla

Cofounder of Sun Microsystems and partner at Kleiner, Perkins, Caufield & Byers

The Motorola 68010 was my favorite chip. With its power and virtual memory support, it said to the world, “Microprocessors can stand and compete with the big boys—the minis and mainframes.”

Carver Mead

Professor of engineering and applied science, Caltech

Photo: Impinj

My favorite chip contained only a single transistor, although a remarkable one: a Schottky barrier gate field-effect transistor made from GaAs (later called the MESFET and now, using more advanced semiconductor structures, the HEMT).

I designed it over Thanksgiving break in 1965. The very high mobility of the III-V materials, together with the absence of minority-carrier storage effects, made these devices far superior for a microwave power-output stage.

Despite my efforts to interest American companies, the Japanese were the first to develop these devices. They have made microwave communications in satellites, cellphones, and many other systems possible for many decades.

Steve Jurvetson

Managing director of Draper Fisher Jurvetson

Photo: Leino Ole

The Motorola 68000 was a special one. I built a speaking computer with it. I also wrote a multitasker in assembler. That chip was the workhorse of learning back during my M.S.E.E. days. But wait—other chips come to mind.

I also like ZettaCore’s first 1-megabyte molecular memory chip, D-Wave’s solid-state 128-qubit quantum computer processor, the Pentium (I’ve got an 8-inch wafer signed by Andy Grove in my office), the Canon EOS 5D 12.8-megapixel CMOS sensor, and the iTV 5-bit asynchronous processor running Forth and a custom OS—whew!

Sophie Vandebroek

Chief technology officer, Xerox

Photo: Xerox

My selection is Analog Devices’ iMEMS accelerometer, the first commercial chip to significantly integrate MEMS and logic circuitry. Commercialized in the early 1990s, it revolutionized the automotive air-bag industry—and saved lives!

Today this type of accelerometer is used in a variety of applications, including the Nintendo Wii and the Apple iPhone. Other types of MEMS chips are more and more present in a broad variety of applications.

Morris Chang

Founder of TSMC

Photo: TSMC

One of my favorite great microchips is the Intel 1103, 1-kilobit DRAM, circa 1970. Reasons: one, huge commercial success; two, started Intel on its way; three, demonstrated the power of MOS technology (versus bipolar); and four, opened up at least another 40 years of life for Moore’s Law.

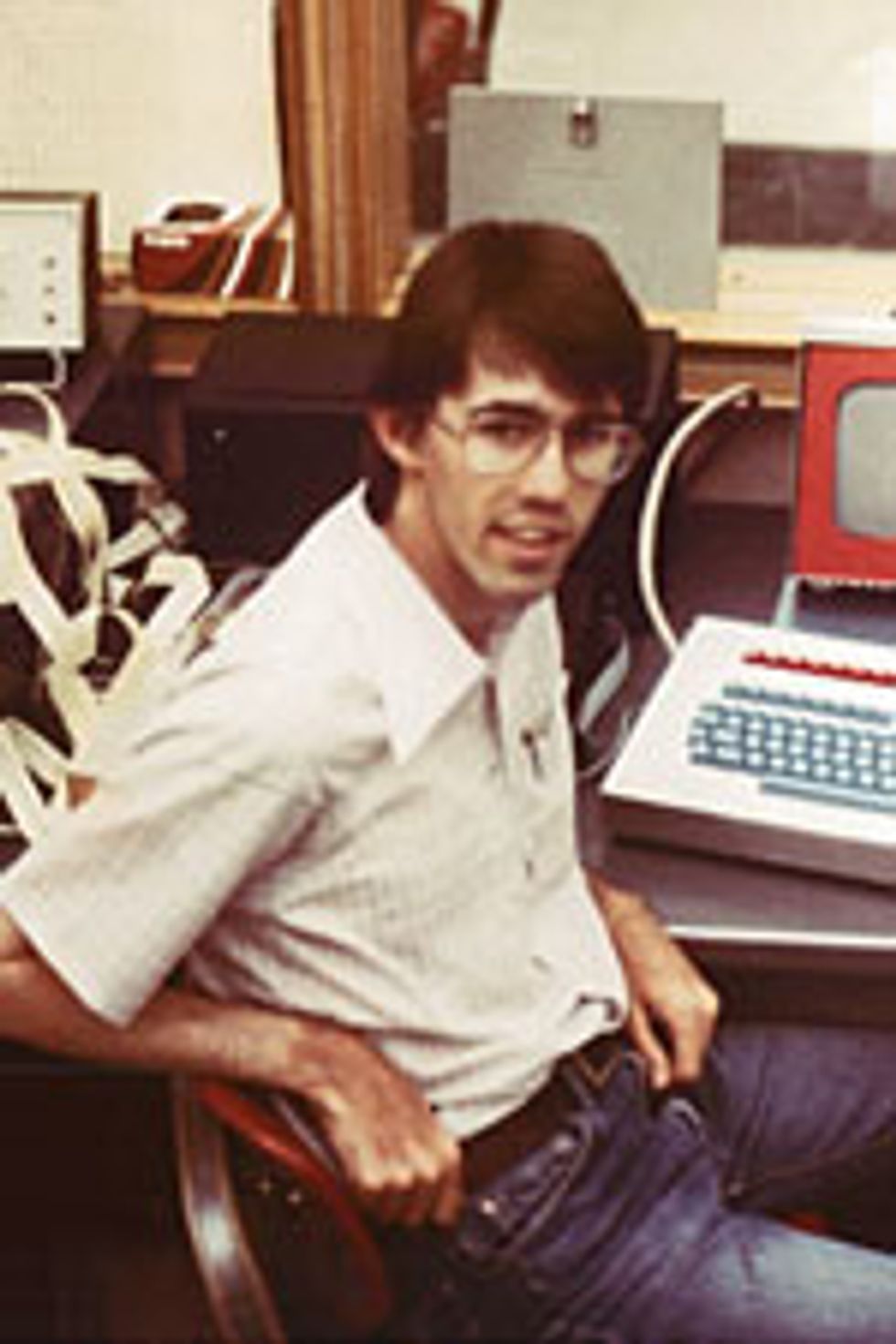

David Ditzel

Intel’s chief architect for hybrid parallel computing

Photo: David Ditzel

My favorite chip: the 6502 from MOS Technology. This 8-bit microprocessor was used by me and many hobbyists for building our own computers [above, me and my homemade PC in 1977]. I wrote a small operating system that fit in 4 kilobytes, and Tiny BASIC from Tom Pittman fit in 2 KB.

The reason it was a great chip was that in the 8-bit era, you could use the first 256 bytes of memory as 128 16-bit pointers to index from, making this machine much easier to program than other 8-bit alternatives. It was a big deal when you had to enter your programs in hexadecimal binary form.
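The pointer trick described here corresponds to the 6502's indirect indexed addressing mode, written `LDA ($zp),Y` in assembler. The following is a minimal Python sketch of that lookup, not a full emulator; the function names and the sample addresses are illustrative assumptions, not anything from the original machine or operating system.

```python
# Simplified model of 6502 indirect indexed addressing: LDA ($zp),Y.
# All names and sample addresses are hypothetical, for illustration only.

ZERO_PAGE_SIZE = 256                     # the first 256 bytes of memory
POINTER_SLOTS = ZERO_PAGE_SIZE // 2      # room for 128 two-byte pointers

memory = bytearray(65536)                # the 6502's 64 KB address space


def read_zero_page_pointer(mem, zp_addr):
    """Read a 16-bit little-endian pointer stored in page zero."""
    low = mem[zp_addr & 0xFF]
    high = mem[(zp_addr + 1) & 0xFF]     # the second byte wraps within page zero
    return low | (high << 8)


def lda_indirect_indexed(mem, zp_addr, y):
    """Return the byte at (pointer stored at zp_addr) + Y."""
    base = read_zero_page_pointer(mem, zp_addr)
    return mem[(base + y) & 0xFFFF]


# Store the pointer 0x2000 in zero-page locations 0x10 and 0x11,
# then fetch the fourth byte of the table it points to.
memory[0x10] = 0x00
memory[0x11] = 0x20
memory[0x2003] = 0x42
value = lda_indirect_indexed(memory, 0x10, 3)
```

Because the instruction names only a one-byte zero-page location, a program entered by hand needs fewer bytes per memory reference than it would with full 16-bit addresses.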

Jeff Hawkins

Founder of Palm and Numenta

My personal favorite—a chip that opened my eyes to what was possible—was the Intel 2716 EPROM, vintage late 1970s. The 2716 was nonvolatile, held 2 kilobytes of memory, and, unlike many other chips, used a single 5-volt power supply, so it could be used as storage in a small and practical package.

Of course, its big disadvantage was that you needed a UV light to erase it. But with a little imagination you could see that one day it could all be done electrically. In some sense the 2716 is the great-grandparent of today’s flash memory chips.

Lee Felsenstein

Computer pioneer

Photo: Lee Felsenstein

The humble, ubiquitous, and quite inexpensive Signetics 555 timer had a big impact on my career, when I found myself using it to craft various pulse-sequencing circuits, baud-rate oscillators, and ramp generators for my earliest consulting clients.

With a latch triggered by two comparators, a high-current-drive output and a separate open-collector output for discharging capacitors, the 555 offered a wide range of uses with the addition of a few analog components.

It suggested that it could provide more than it delivered at times, which helped me refine my understanding of the limits of a chip and the need to consider its operation in the analog domain.
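For the oscillator uses mentioned above, the textbook astable configuration gives a feel for how few analog parts are involved: two resistors and one capacitor set the timing. The sketch below uses the standard idealized timing equations; the function name and component values are illustrative assumptions, and a real part will deviate somewhat because of tolerances and the analog effects noted above.

```python
import math


def astable_555(r1_ohms, r2_ohms, c_farads):
    """Idealized timing of a 555 in the standard astable configuration.

    The capacitor charges through R1 + R2 and discharges through R2
    by way of the discharge pin.
    """
    ln2 = math.log(2)
    t_high = ln2 * (r1_ohms + r2_ohms) * c_farads   # output-high time, seconds
    t_low = ln2 * r2_ohms * c_farads                # output-low time, seconds
    period = t_high + t_low
    return {
        "t_high_s": t_high,
        "t_low_s": t_low,
        "frequency_hz": 1.0 / period,
        "duty_cycle": t_high / period,
    }


# Hypothetical component values: R1 = 1 kilohm, R2 = 10 kilohms, C = 100 nF.
timing = astable_555(1_000, 10_000, 100e-9)
```

In this configuration the duty cycle is always above 50 percent, because the charging path includes both resistors while the discharging path includes only one.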

Photo: Jerry Markatos

Frederick P. Brooks, Jr.

Professor of computer science at the University of North Carolina at Chapel Hill

The original ARM chip is at the top of my list. There are today more ARM computers than all others put together, because of their use in cellular phones. They are all descendants of that original ARM chip.

Photo: Donya White

Allen Baum

Platform architect at Intel

If I had to pick the top of the heap, I think it would have to be the 74163 Synchronous 4-bit Preloadable counter. I used this chip [a TTL, or transistor-transistor logic, integrated circuit created by Texas Instruments] in a lot of different designs. It could be used to count, to shift, and it could be expanded.

Using it taught me a lot about how incrementers worked and how lookahead carry worked. I designed clocks, frequency and event counters with them, used them as state machine controllers, used them in a homework assignment to design a machine that played boogie-woogie bass lines—and of course, Woz used them in his blue boxes!
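A small behavioral model shows why a synchronous, preloadable counter is so flexible. This is a sketch under stated assumptions: the class and method names are hypothetical, signals are modeled as booleans with active-low inputs passed as `clr_n` and `load_n`, and timing details such as setup and hold are ignored.

```python
class Counter74163:
    """Behavioral sketch of a 4-bit synchronous counter in the style of the 74163."""

    def __init__(self):
        self.q = 0  # the four output bits, held as an integer 0..15

    def rco(self, ent=True):
        """Ripple-carry output: high when counting is enabled and Q is 15."""
        return ent and self.q == 0xF

    def clock(self, clr_n=True, load_n=True, enp=True, ent=True, data=0):
        """Apply one rising clock edge; every action happens on the edge."""
        if not clr_n:                 # synchronous clear has priority
            self.q = 0
        elif not load_n:              # synchronous parallel load
            self.q = data & 0xF
        elif enp and ent:             # count only when both enables are high
            self.q = (self.q + 1) & 0xF
        return self.q


def clock_pair(low, high):
    """Clock two cascaded stages; the low stage's carry enables the high stage."""
    carry = low.rco()                 # sampled before the edge, as in hardware
    low.clock()
    high.clock(ent=carry)
    return (high.q << 4) | low.q


# Expansion: two 4-bit stages form an 8-bit counter.
low, high = Counter74163(), Counter74163()
for _ in range(17):
    value = clock_pair(low, high)

# Preload: starting from 12, the counter reaches 15 after three clocks,
# which is the basis of a programmable divider.
divider = Counter74163()
divider.clock(load_n=False, data=12)
for _ in range(3):
    divider.clock()
```

Feeding the carry output back to the load input, so that the counter reloads its starting value each time it reaches 15, turns the same part into a divide-by-N circuit or a simple state machine sequencer.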

Photo: TPL Group

Nick Tredennick

Technology analyst for Gilder Publishing

One of the greatest chips of all time was the AL1, an 8-bit microprocessor designed by Lee Boysel at Four Phase Systems. That chip was the real pioneer in microprocessor design. It was in systems delivered to the field at least by 1969, and I still remember a description of the chip that appeared in Computer Design in April 1969.

In my opinion, this was the first microprocessor in a commercial design and possibly the first real microprocessor. Motorola bought Four Phase, and the company and its products soon disappeared, enabling surviving companies to rewrite the history of the microprocessor. The AL1 is the ultimate in long-forgotten specimens.

Photo: National Semiconductor

Robert A. Pease

Staff scientist at National Semiconductor

One of my favorite chips was Robert J. Widlar’s LM10. It was the first op-amp in the world to have rail-to-rail output swing. Also, it had a 200-millivolt band-gap reference built in, so it could put out 200 to 39 000 mV of stable reference. (It took a couple of dozen years before anybody else made such a wide range of Vref.)

Also, this IC could run from plus or minus 0.6 up to plus or minus 20 V of power. Unique. It is still made and sold 30 plus years later. Unique capabilities. The LM10 has helped me make many useful circuits.

Gordon Bell

Computer pioneer, researcher at Microsoft

As a computer guy, I tend to not see the chip but rather the complete system that the chip enables. So every new density or technology enables a unique system or system capability. I have been wowed over time.

I can remember in the early days that just getting enough transistors to have a complete UART [universal asynchronous receiver/transmitter] was a landmark.

As a computer historian, I think that the 4004 system announcement was a vision of where the world would go.

The 2901 [a bit-slice processor by AMD] enabled lower-cost minicomputers.

All of the chips that enabled one-chip minicomputers, from the LSI-11 through the VAX and Alpha, were meaningful to me.

Now, more recently, I’d say I like these two complete systems on a chip: The Dust Networks wireless sensor network mesh chip with microprocessor, program memory, radio, and sensors that use 900-megahertz and 5.3-gigahertz bands (there’s a mini datasheet on the Web: http://dustnetworks.com/cms/sites/default/files/DN2510.pdf), and the G2 Microsystems Wi-Fi chips—you can use their Wi-Fi IP addresses to do all kinds of location applications like tracking stuff or connecting peripherals and appliances.

T.J. Rodgers

Founder and CEO of Cypress Semiconductor Corp.

Photo: Cypress Semiconductor Corp.

One of my favorite chips of all time is the Cypress Semiconductor CY8C21x34. Like other PSoC [programmable system-on-chip] devices, it combines a microcontroller with flash memory, programmable analog and digital blocks, and other subsystems.

It lets customers create software-based custom chips in hours rather than weeks or months. It has been designed into Italian coffeemakers, high-end cellphones, set-top box platforms, electric toothbrushes, air cleaners, high-definition televisions, digital cameras, remote-control hobbyist helicopters, printers, treadmills, automobile sound systems, medical equipment, motorized baby strollers, and “intelligent” cushioning-changing running shoes.

Photo: Polaris Venture Partners

Robert Metcalfe

General partner, Polaris Venture Partners

Favorite chip: SEEQ’s Ethernet chip (the first), circa 1982, allowed 3Com to ship the first PC Ethernet, for the IBM PC in September 1982.

David Liddle

Partner at U.S. Venture Partners

Photo: US Venture Partners

My choice is the National Semiconductor LM709 Operational Amplifier. Forty years ago, it suddenly made subtle analog design accessible to a much wider audience. Its low cost, low noise, durable design, and high performance really changed the playing field, after the pioneering Fairchild 741 defined the category. The untimely early death of its brilliant designer, Robert Widlar, is a whole story in itself.

Sophie Wilson

Codesigner of the ARM processor

Photo: Alan Wilson

My favorite chips: MOS Technology 6502 processor, NE555 timer, µA741 op-amp, and the entire RCA CMOS logic family. I still have the original 1973 data book. Way more important than TTL. That book got me my first job with ICI Fibres Research and my second job with Hermann Hauser [cofounder of Acorn Computers]. It was how logic would be built in the future.

Photo: Google

Vinton Cerf

Vice president and chief Internet evangelist for Google

The chips that intrigue me the most are the multicore processors now being delivered by Intel and Advanced Micro Devices and others. The reason these are so interesting is that they pose fascinating problems for compilers looking to parallelize serial programs, they raise issues about input/output rates to and from the chip (how quickly data and instructions can flow to and from the cores), and they raise interesting questions about non–Von Neumann architectures.

Ali Hajimiri

Professor of electrical engineering, Caltech

My favorite chip of all time has been the distributed active transformer (DAT) [used in CMOS power amplifiers for wireless systems]. It resulted in a performance improvement of almost two orders of magnitude over the state of the art. It’s truly novel and elegant—and it looks fabulous!

Charles G. Sodini

MIT professor

Photo: Massachusetts Institute of Technology

My favorite: Mostek MK4096 4K DRAM. It featured multiplexed addresses, invented by Bob Proebsting, which permitted space-saving 16-pin packaging.

When it was introduced, the industry seemed uncertain as to which packaging would become standard. The trade press called that pin-out design “the maverick 4K package.” Eventually, the Mostek pin configuration did become the industry standard, not only for 4K DRAMs but also for many later generations of DRAMs.
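The pin saving from multiplexed addressing follows from simple arithmetic: 4096 one-bit locations need 12 address bits, and sending them in two halves over the same pins needs only 6 address pins. The sketch below illustrates the split; the function name is hypothetical, and the assumption that the upper 6 bits form the row address is made only for illustration.

```python
ADDRESS_BITS = 12                 # 2**12 = 4096 one-bit locations
ROW_BITS = 6                      # first half, latched by the row strobe
COL_BITS = ADDRESS_BITS - ROW_BITS


def split_address(addr):
    """Split a 12-bit address into the two halves sent over the shared pins.

    Which half of the address serves as the row is an assumption here.
    """
    if not 0 <= addr < (1 << ADDRESS_BITS):
        raise ValueError("address out of range for a 4K device")
    row = addr >> COL_BITS                  # upper 6 bits
    col = addr & ((1 << COL_BITS) - 1)      # lower 6 bits
    return row, col


pins_without_multiplexing = ADDRESS_BITS
pins_with_multiplexing = max(ROW_BITS, COL_BITS)

row, col = split_address(0xABC)
```

The cost of the scheme is two strobe signals and a two-step address cycle, which is the trade that allowed the smaller package.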

Hiromichi Fujisawa

Corporate chief scientist at Hitachi

Photo: Hitachi

I’ve been more of a software person rather than a hardware person. But I did some experimentation with the AMD Am2900 bit-slice microprocessors. This work involved designing a special processing system based on the Am2900 family to do Kanji OCR (optical character recognition). It was a neat system. And it was 30 years ago!

James Meindl

Director, Microelectronics Research Center at the Georgia Institute of Technology

Photo: Georgia Institute of Technology

The chip that I consider my personal favorite was a 6-by-24 array of bipolar phototransistors designed to image a single printed character using a thumb-size camera. A blind reader would use the camera to scan a line of print while his or her index finger made contact with an array of 6 by 24 vibrating piezoelectric stimulators that presented a tactile image of each printed character. Tens of thousands of these Optacon optical-to-tactile reading aids for the blind were provided to blind users worldwide from about 1970 to 2000.

While at Stanford University, I presented a technical paper describing the Optacon at the 1969 IEEE International Solid-State Circuits Conference, and a live on-stage demonstration was given by then Miss Candace Linvill, now Mrs. Candace Berg, who read at a speed of about 75 words per minute. She received a standing ovation, and the paper subsequently received an outstanding paper award from the ISSCC.

Francine Berman

Director, San Diego Supercomputer Center

Photo: UC San Diego

It might be surprising that a supercomputer center director is picking this family of chips, but I think the ARM contributions have been tremendously important. Chips in the ARM family are used in games, iPods, and cellphones, and personal digital devices have created a paradigm shift in the way we live, play, and work.

With the now-ubiquitous use of these small-scale devices, location largely doesn’t matter for many of our activities, creating unprecedented flexibility and the ability to access information anywhere at any time. The low-power focus of the ARM design also predates the time when power became an important part of the design of nearly all microchips.

Nance Briscoe

Associate curator, information technology and communications, National Museum of American History, Smithsonian Institution

Photo: Olan Mills

One of my favorite chips is the Intel i860, a 64-bit RISC [reduced-instruction-set computer] microprocessor introduced in 1989. It was used in two massively parallel supercomputers, the Touchstone Delta and the Paragon, which helped pave the way to teraflops levels of computing power based on CCOTS (commercial commodity off-the-shelf) technology.

The Touchstone Delta—part of the Touchstone Program cosponsored by Intel and the Defense Advanced Research Projects Agency—had 512 i860 microprocessors. It successively attained record speeds of 4.0 gigaflops, 8.6 gigaflops, and 13.9 gigaflops, taking the world’s fastest computer title at different points in time. It was used for a wide spectrum of research, including climate, aerospace, and biological simulations.

Intel’s Paragon supercomputer was the commercial offspring of the Delta. It set two speed records—first in 1994, using 3680 i860s, when it attained 143 gigaflops, and then again in late 1994, when Intel and Sandia National Laboratories built a larger Paragon system—with 6780 i860s—achieving 281 gigaflops. The Paragon was used to simulate the Shoemaker-Levy comet collision with Jupiter, and the results were incredibly accurate. The Delta and Paragon systems drove the supercomputer industry as a whole for years.

Some recent top supercomputers, including Sandia’s ASCI, which broke the teraflops barrier in 1996, use technology that can be traced back to those pioneering systems—and thus to the i860! At the National Museum of American History’s Chip Collection, we have the i860 original 1989 specification.