Excuse me a moment—I am going to be bombastic, overexcited, and possibly annoying. The race is run, and we have a winner in the future of quantum computing. IBM, Google, and everyone else can turn in their quantum computing cards and take up knitting.

OK, the situation isn’t that cut and dried yet, but a recent paper has described a fully programmable chip-based optical quantum computer. That idea presses all my buttons, and until someone restarts me, I will talk of nothing else.

Love the light


There is no question that quantum computing has come a long way in 20 years. Two decades ago, optical quantum technology looked to be the way forward. Storing information in a photon’s quantum states (as an optical qubit) was easy. Manipulating those states with standard optical elements was also easy, and measuring the outcome was relatively trivial. Quantum computing was just a new application of existing quantum optics experiments, and those experiments had already shown how easy the systems were to use, which gave optical technologies an early advantage.

But one key to quantum computing (or any computation, really) is the ability to change a qubit’s state depending on the state of another qubit. This turned out to be doable but cumbersome in optical quantum computing. Typically, an operation on two (or more) qubits is a nonlinear operation, and optical nonlinear processes are very inefficient. Linear-optical two-qubit operations are possible, but they are probabilistic: they only work some of the time, so you need to repeat your calculation many times to be sure you have the correct answer.

A second critical feature is programmability. It is not desirable to have to create a new computer for every computation you wish to perform. Here, optical quantum computers really seemed to fall down. An optical quantum computer could be easy to set up and measure, or it could be programmable—but not both.

In the meantime, private companies bet on being able to overcome the challenges faced by superconducting transmon qubits and trapped ion qubits. In the first case, engineers could make use of all their experience from printed circuit board layout and radio-frequency engineering to scale the number and quality of the qubits. In the second, engineers banked on being able to scale the number of qubits, already knowing that the qubits were high-quality and long-lived.

Optical quantum computers seemed doomed.

Future’s so bright

So, what has changed to suddenly make optical quantum computers viable? The last decade has seen a number of developments. One is the appearance of detectors that can resolve the number of photons they receive. All the original work relied on single-photon detectors, which could only register light or no light. It was up to you to ensure that what you were detecting was a single photon and not a whole stream of them.
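To see why "light or no light" is a problem, here is a minimal sketch comparing an on/off (click) detector with an idealized photon-number-resolving detector. It assumes a hypothetical source, an attenuated laser pulse whose photon number follows Poisson statistics, and perfect, lossless detectors; neither assumption describes the hardware in the paper. The function `multiphoton_fraction_given_click` estimates how many of the clicks you counted were secretly two or more photons.

```python
import math

def poisson_pmf(n: int, mean: float) -> float:
    """Probability of exactly n photons in a laser pulse with the given mean (assumed Poissonian)."""
    return math.exp(-mean) * mean ** n / math.factorial(n)

def click_detector(n_photons: int) -> int:
    """On/off detector: reports 1 for any nonzero number of photons."""
    return 1 if n_photons > 0 else 0

def pnr_detector(n_photons: int) -> int:
    """Idealized photon-number-resolving detector: reports the photon count itself."""
    return n_photons

def multiphoton_fraction_given_click(mean: float) -> float:
    """Of the pulses that make a click detector fire, the fraction that held 2+ photons."""
    p0 = poisson_pmf(0, mean)
    p1 = poisson_pmf(1, mean)
    return (1.0 - p0 - p1) / (1.0 - p0)

# The click detector gives the same answer for 1, 2, or 3 photons.
for n in range(4):
    print(n, click_detector(n), pnr_detector(n))

# Dimmer pulses mean fewer hidden multiphoton events, but also far fewer clicks.
for mean in (0.01, 0.1, 1.0):
    print(f"mean {mean}: {multiphoton_fraction_given_click(mean):.3f} of clicks were multiphoton")
```

The only remedy with a click detector is to make the light so dim that two photons rarely arrive together, which also makes single photons rare. A number-resolving detector simply reports the count.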

Because single-photon detectors can’t distinguish between one, two, three, or more photons, quantum computers were limited to single-photon states. Complicated computations would require many single photons that all need to be controlled, set, and read. As the number of operations goes up, the chance of success goes down dramatically. Thus, the same computation would have to be run many, many times before you could be sure of the right answer.
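To put rough numbers on "goes down dramatically," here is a minimal sketch that assumes every probabilistic operation succeeds independently with the same probability `p_gate`. That parameter and the value 0.5 used below are hypothetical; real linear-optics schemes have their own success rates. The chance that a whole run works is `p_gate ** n_gates`, so the expected number of repetitions grows exponentially with the number of operations.

```python
import math

def run_success_probability(p_gate: float, n_gates: int) -> float:
    """Chance that all n_gates probabilistic operations succeed in one run (independence assumed)."""
    return p_gate ** n_gates

def expected_runs(p_gate: float, n_gates: int) -> float:
    """Mean number of repetitions until the first fully successful run (geometric distribution)."""
    return 1.0 / run_success_probability(p_gate, n_gates)

def runs_for_confidence(p_run: float, confidence: float) -> int:
    """Repetitions needed so at least one run succeeds with the given confidence (0 < p_run < 1)."""
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_run))

# Hypothetical 50% success per operation.
for n in (1, 5, 10, 20):
    print(n, run_success_probability(0.5, n), expected_runs(0.5, n))
```

Twenty operations at even odds already mean about a million expected repetitions, which is why reducing the number of fragile single-photon operations matters so much.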

By using photon-number-resolving detectors, scientists are no longer limited to states encoded in a single photon. Now, they can use states defined by the number of photons. In other words, a single qubit can be in a superposition of containing different numbers of photons (zero, one, two, and so on, up to some maximum number). Hence, fewer qubits are needed for a computation.
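Here is a rough illustration of why counting photons buys you room. Physicists would usually call a unit with more than two levels a qudit, or simply an optical mode. A mode allowed to hold 0 to `max_photons` photons has `max_photons + 1` levels, so a few modes can span the same state space as many more two-level qubits. The cutoff of three photons below is an arbitrary choice for illustration, and the comparison only counts dimensions; it says nothing about how hard those states are to prepare or control.

```python
import math

def qubit_register_dim(n_qubits: int) -> int:
    """State-space size of n two-level qubits."""
    return 2 ** n_qubits

def photon_mode_dim(n_modes: int, max_photons: int) -> int:
    """State-space size of n optical modes, each holding 0..max_photons photons."""
    return (max_photons + 1) ** n_modes

def normalize(amplitudes: list[complex]) -> list[complex]:
    """Rescale amplitudes so the photon-number probabilities sum to 1."""
    norm = math.sqrt(sum(abs(a) ** 2 for a in amplitudes))
    return [a / norm for a in amplitudes]

# One mode in an equal superposition of 0, 1, and 2 photons.
state = normalize([1, 1, 1])
probabilities = [abs(a) ** 2 for a in state]
print(probabilities)

# Eight modes with up to three photons each span as many states as 16 qubits.
print(photon_mode_dim(8, 3), qubit_register_dim(16))
```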

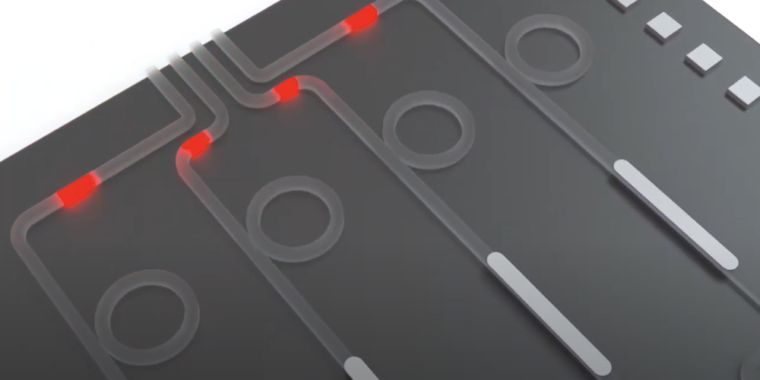

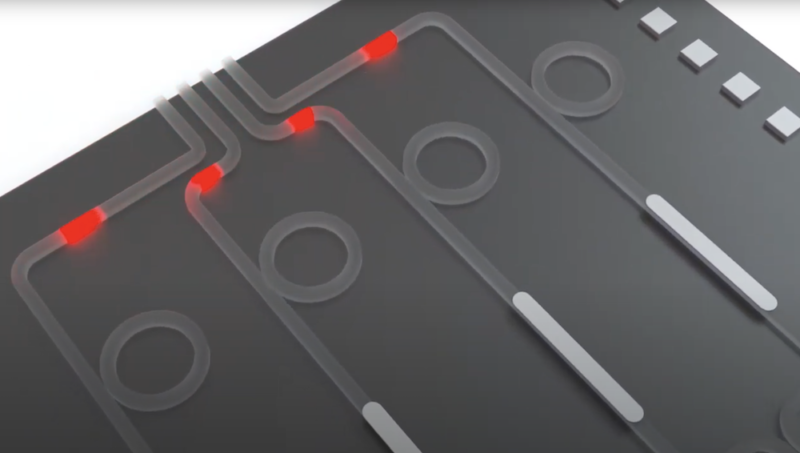

A second key development was integrated optical circuits. Integrated optics have been around for a while, but they have not exactly had the precision and reliability of their electronic counterparts. That has changed. As engineers gained experience with the fabrication techniques and the design requirements for optical circuits, performance has gotten much, much better. Integrated optics are now commonly used in the telecommunications industry, with the scale and reliability that implies.

As a result of these developments, the researchers were able to simply design their quantum optical chip and order it from a fab, something unthinkable less than a decade ago. So, in a sense, this story has been 20 years in the making: that is how long the underlying technology took to mature.

Putting the puzzle together

The researchers, from a startup called Xanadu and the National Institute of Standards and Technology, have pulled together these technology developments to produce a single integrated optical chip that generates eight qubits. Calculations are performed by passing the photons through a complex circuit made up of Mach-Zehnder interferometers. In the circuit, each qubit interferes with itself and with some of the other qubits at each interferometer.

As each qubit exits an interferometer, the direction it takes is determined by its state and the internal setting of the interferometer. That direction determines which interferometer it moves to next and, ultimately, where it exits the device.
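A single Mach-Zehnder interferometer can be modeled as a 2x2 matrix: a 50:50 beamsplitter, a phase shift on one arm, and a second beamsplitter. The sketch below uses one common textbook convention (a symmetric beamsplitter, an internal phase `theta`, and an optional external phase `phi`). The names and conventions are assumptions for illustration; the article doesn't describe the chip's actual layout. It shows how the internal phase alone decides which output port a single photon leaves from.

```python
import cmath
import math

Matrix = list[list[complex]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 2x2 complex matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

# Symmetric 50:50 beamsplitter (one common convention).
S = 1 / math.sqrt(2)
BEAMSPLITTER: Matrix = [[S, 1j * S], [1j * S, S]]

def phase_shifter(theta: float) -> Matrix:
    """Adds phase theta to the upper arm only."""
    return [[cmath.exp(1j * theta), 0], [0, 1]]

def mzi(theta: float, phi: float = 0.0) -> Matrix:
    """External phase phi, then beamsplitter, internal phase theta, beamsplitter."""
    return matmul(BEAMSPLITTER, matmul(phase_shifter(theta), matmul(BEAMSPLITTER, phase_shifter(phi))))

def exit_probabilities(u: Matrix, input_port: int) -> list[float]:
    """Probability that a single photon entering input_port leaves from each output port."""
    return [abs(u[row][input_port]) ** 2 for row in range(2)]

for theta in (0.0, math.pi / 2, math.pi):
    print(f"theta={theta:.2f}: {exit_probabilities(mzi(theta), 0)}")
```

With this convention, a photon entering port 0 leaves port 0 with probability sin²(θ/2) and port 1 with probability cos²(θ/2): θ = 0 sends it across, θ = π keeps it on the same side, and θ = π/2 splits it evenly. Chaining many such matrices gives the routing through the full circuit.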

The internal setting of the interferometer is the knob that the programmer uses to control the computation. In practice, the knob just changes the temperature of individual waveguide segments. But the programmer doesn’t have to worry about these details. Instead, they have an application programming interface (the Strawberry Fields Python library) that takes very normal-looking Python code. A control system then translates this code into the temperature differentials it maintains on the chip.
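The translation from "phase I want" to "how hard to heat this waveguide" can be sketched under a simple assumption: the phase shift grows linearly with heater power. This is not Strawberry Fields or Xanadu's control stack. The calibration constant `p_pi_mw` (power for a π phase shift), the per-heater `biases`, the heater names, and `compile_program` are all hypothetical.

```python
import math

TWO_PI = 2 * math.pi

def heater_power_mw(target_phase: float, p_pi_mw: float, bias_phase: float = 0.0) -> float:
    """Heater power that produces target_phase, assuming phase grows linearly with power.

    p_pi_mw: hypothetical calibration, the power giving a pi phase shift.
    bias_phase: hypothetical phase the waveguide already has with the heater off.
    Heaters can only add heat, so the extra phase needed is wrapped into [0, 2*pi).
    """
    extra = (target_phase - bias_phase) % TWO_PI
    return extra / math.pi * p_pi_mw

def compile_program(phases: dict[str, float], p_pi_mw: float,
                    biases: dict[str, float]) -> dict[str, float]:
    """Turn a {heater_name: phase} program into {heater_name: heater power in mW}."""
    return {name: heater_power_mw(phase, p_pi_mw, biases.get(name, 0.0))
            for name, phase in phases.items()}

# Hypothetical two-heater program and calibration values.
program = {"mzi_0": math.pi / 2, "mzi_1": 0.0}
settings = compile_program(program, p_pi_mw=25.0, biases={"mzi_1": math.pi / 2})
print(settings)
```

A real controller would also have to correct for heat spreading from one heater to its neighbors, which this linear, independent-heater sketch ignores.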


To demonstrate that their chip was flexible, the researchers performed a series of different calculations. The first calculation basically let the computer simulate itself: how many different states can it generate in a given time? (This is the sort of calculation that makes me grind my teeth, because any quantum device can efficiently simulate itself.) After that, however, the researchers got down to business. They successfully calculated the vibrational states of ethylene (two carbon atoms and four hydrogen atoms) and of the more complicated phenylvinylacetylene (the favorite child’s name for 2021). These carefully chosen examples fit beautifully within the eight-qubit space of the quantum computer.

The third computation involved computing graph similarity. I must admit to not understanding graph similarity, but I think it is a pattern-matching exercise, like facial recognition. These graphs were, of course, quite simple, but again, the machine performed well. According to the authors, this was the first such demonstration of graph similarity on a quantum computer.
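The article doesn't say how the graph-similarity calculation works, so what follows is a classical toy, not the researchers' method. As I understand it, in Gaussian boson sampling the probability of a particular photon pattern is tied to the hafnian of a matrix built from the graph, and the hafnian of a graph's adjacency matrix counts its perfect matchings (ways to pair up every vertex along edges). GBS-based similarity measures roughly turn such statistics into a feature vector per graph and compare the vectors. This sketch computes matching counts exactly by brute force, which only works for tiny graphs, and compares graphs by cosine similarity; the feature choice and the names `matching_features` and `cosine_similarity` are hypothetical.

```python
import math
from itertools import combinations

Matrix = list[list[int]]

def hafnian(a: Matrix) -> int:
    """Sum over perfect matchings of the product of matched entries (brute force)."""
    n = len(a)
    if n == 0:
        return 1  # the empty matching
    if n % 2:
        return 0  # an odd number of vertices cannot be perfectly matched
    total = 0
    for j in range(1, n):
        if a[0][j] == 0:
            continue
        # Pair vertex 0 with vertex j, then match the remaining vertices.
        rest = [k for k in range(1, n) if k != j]
        sub = [[a[r][c] for c in rest] for r in rest]
        total += a[0][j] * hafnian(sub)
    return total

def matching_features(adj: Matrix, sizes: tuple[int, ...] = (2, 4)) -> list[int]:
    """Hypothetical features: total perfect matchings over all induced k-vertex subgraphs."""
    features = []
    for k in sizes:
        total = 0
        for subset in combinations(range(len(adj)), k):
            sub = [[adj[r][c] for c in subset] for r in subset]
            total += hafnian(sub)
        features.append(total)
    return features

def cosine_similarity(u: list[int], v: list[int]) -> float:
    """Cosine of the angle between two feature vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    return dot / (math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v)))

square = [[0, 1, 0, 1], [1, 0, 1, 0], [0, 1, 0, 1], [1, 0, 1, 0]]  # 4-cycle
path = [[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]]    # 0-1-2-3
complete = [[0, 1, 1, 1], [1, 0, 1, 1], [1, 1, 0, 1], [1, 1, 1, 0]]
print(hafnian(square), hafnian(path), hafnian(complete))
print(cosine_similarity(matching_features(square), matching_features(path)))
```

The recursion visits every perfect matching, and their number grows factorially with graph size, which hints at why these quantities are hard to compute classically. The toy features are also coarse: the 4-cycle and the complete graph give proportional vectors and therefore a similarity of exactly 1, so a real feature set has to be richer.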

Is it really done and dusted?

All right, as I warned you, my introduction was exaggerated. However, this is a big step. There are no large barriers to scaling this same computer to a larger number of qubits. The researchers will have to reduce photon losses in their waveguides, and they will have to reduce the amount of leakage from the laser that drives everything (currently it leaks some light into the computation circuit, which is very undesirable). The thermal management will also have to be scaled. But, unlike previous examples of optical quantum computers, none of these are “new technology goes here” barriers.
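Why photon loss matters so much for scaling can be shown with decibel arithmetic. Losses in dB along a path add up, and a computation that needs every photon to arrive succeeds only if each one survives independently. The per-component losses below (1 dB in, eight 0.1 dB stages, 1 dB out) are hypothetical numbers chosen for illustration, not figures from the paper.

```python
def db_to_transmission(loss_db: float) -> float:
    """Fraction of light that survives a given loss in decibels."""
    return 10 ** (-loss_db / 10)

def path_transmission(component_losses_db: list[float]) -> float:
    """Losses in dB along a path add, so convert the total once."""
    return db_to_transmission(sum(component_losses_db))

def all_photons_survive(transmission: float, n_photons: int) -> float:
    """Probability that every one of n photons makes it through (independent losses assumed)."""
    return transmission ** n_photons

# Hypothetical path: input coupling, eight interferometer stages, output coupling.
path = [1.0] + [0.1] * 8 + [1.0]
t = path_transmission(path)
for n in (1, 4, 8, 16):
    print(n, all_photons_survive(t, n))
```

Because the survival probability is raised to the power of the photon number, shaving even a fraction of a decibel per component pays off more and more as the machine grows.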

What is more, scaling up does not bring a huge increase in complexity. In superconducting qubits, each qubit is a current loop in a magnetic field. Each qubit generates a field that talks to all the other qubits all the time. Engineers have to go to a great deal of trouble to couple qubits to each other, and decouple them again, at the right moments. The larger the system, the trickier that task becomes. Ion qubit computers face an analogous problem in their trap modes. There isn’t really an analogous problem in optical systems, and that is their key advantage.

Nature, 2021, DOI: 10.1038/s41586-021-03202-1