It is a remarkable historical fact that Bell Labs did not invent the microchip, despite having developed almost all the underlying technology that went into it. This puzzling failure can be attributed partly to market forces—or the lack of them. As former Bell Labs President Ian Ross once explained in an interview, the Labs focused on developing robust, discrete devices that would enjoy 40-year lifetimes in the Bell System, not integrated circuits. Indeed, the main customers for microchips were military procurement officers, who, especially after Sputnik, were willing to cough up more than US $100 a chip for this ultralightweight circuitry. But the telephone company had little need for such exotica. “The weight of the central switching stations was not a big concern at AT&T,” quipped Ross, who back in 1956 had himself fashioned a precursor of the microchip.

Ultimately, though, the company would need integrated circuits. Think of the Bell System as the world’s largest computer, with both analog and digital functions. Its central offices put truly prodigious demands on memory and processing power, both of which could best be supplied by microchips. And it was microchips driven by software that eventually made electronic switching a real success in the 1970s. But by then AT&T was playing an increasingly desperate catch-up game in this crucial technology.

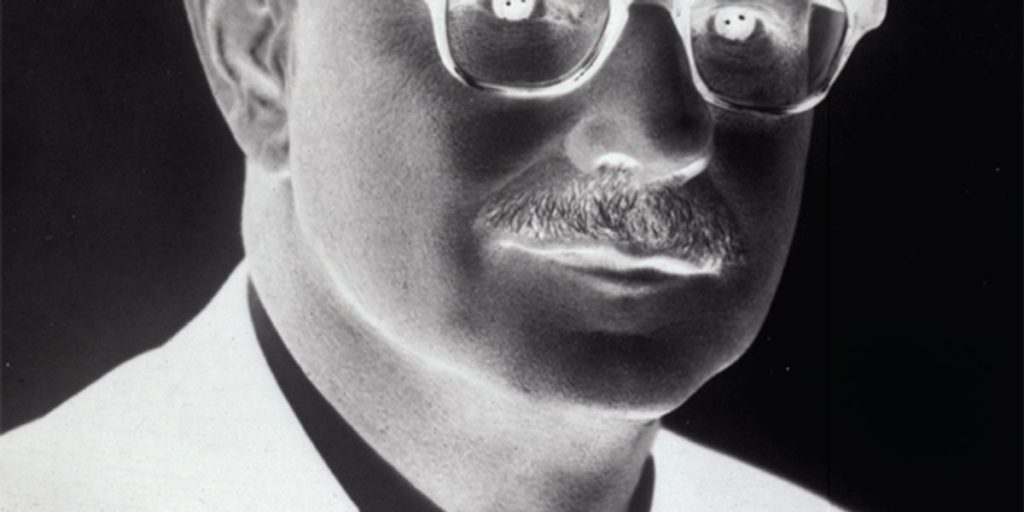

Here Morton was partly to blame. He pooh-poohed the potential of microchips and large-scale integration. Citing his own version of the “tyranny of numbers,” he initially argued that the manufacturing yields on integrated circuits would become unacceptably low as the number of components on a chip grew. Even if each chip component, typically a transistor, could be made with a 99 percent success rate, he reasoned, that figure would have to be multiplied by itself once for every component on the chip, resulting in abominable yields. Tanenbaum summed up Morton’s attitude this way: “The more eggs you put in the chip basket, the more likely it is that you have a bad one.”
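Morton’s arithmetic is easy to reproduce. The sketch below assumes, as his argument did, that every component succeeds or fails independently with the same probability p, so that a chip with n components works only with probability p to the nth power. The function name `chip_yield` is a hypothetical label chosen for illustration.

```python
def chip_yield(p: float, n: int) -> float:
    """Probability that all n components on a chip work.

    Assumes (as Morton's argument did) that each component works
    independently with the same probability p.
    """
    return p ** n


if __name__ == "__main__":
    # Per-component success rate of 99 percent, as in Morton's example.
    for n in (10, 100, 1000):
        print(f"{n:5d} components: yield = {chip_yield(0.99, n):.2e}")
```

On these assumptions the yield falls from about 90 percent for a 10-component chip to roughly 37 percent at 100 components, and to a few thousandths of a percent at 1000 components, the scale the industry would soon call LSI.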

And reliability would suffer, too, or so Morton thought. Because of his lofty position (he had become a vice president in 1958), this argument dominated the thinking at Bell Labs in the early 1960s. “Morton was such a strong, intimidating leader,” observes Eugene Gordon, who worked for him then, “that he could make incorrect decisions and remain unchallenged because of his aggressive style.” Morton’s previous string of successes probably contributed to his sense of his own infallibility.

But his tyranny hypothesis ultimately didn’t hold up. The success rate quoted for microchip components is an average over the entire surface of a silicon wafer. A wafer can have unusually bad regions that drag this average down significantly, while chips in the better regions have much higher success rates, so overall yields turn out to be acceptable. It took Sun Belt outsiders at Fairchild and Texas Instruments to overthrow the tyranny and pioneer microchip manufacturing.
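Why the wafer-wide average misleads can be shown with made-up numbers. The sketch below assumes a hypothetical wafer on which 10 percent of the area is a bad region with a per-component success rate of 90.9 percent and the remaining 90 percent is a good region at 99.9 percent, so the wafer-wide average comes out to exactly Morton’s 99 percent. The region sizes, rates, and function names are illustrative assumptions, not data from Bell Labs or its competitors.

```python
def chip_yield(p: float, n: int) -> float:
    """Yield of an n-component chip when each component works independently with probability p."""
    return p ** n


def clustered_yield(regions: list[tuple[float, float]], n: int) -> float:
    """Area-weighted chip yield for a wafer split into regions.

    Each region is (fraction_of_wafer_area, per_component_success_rate).
    Within a region, components are assumed to fail independently.
    """
    return sum(area * chip_yield(p, n) for area, p in regions)


# Hypothetical wafer: 10% bad area, 90% good area (illustrative numbers only).
REGIONS = [(0.10, 0.909), (0.90, 0.999)]

if __name__ == "__main__":
    n = 100
    avg_p = sum(area * p for area, p in REGIONS)  # wafer-wide average, 0.99
    print(f"average component success rate:    {avg_p:.3f}")
    print(f"naive yield from the average:      {chip_yield(avg_p, n):.3f}")
    print(f"yield counting regions separately: {clustered_yield(REGIONS, n):.3f}")
```

With these numbers a 100-component chip yields about 37 percent if the 99 percent average is applied uniformly, but about 81 percent when the good and bad regions are treated separately, because nearly all the failures pile up in the bad tenth of the wafer. This is an instance of a general rule: the average of p to the nth power is never smaller than the average p raised to the nth power.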

Well into the 1960s, Morton continued to drag his feet on silicon-based chip technology, despite mounting evidence of its promise. He did not consider it a sufficiently “adaptive” technology, by which he meant something that could easily respond to the evolving needs of the Bell System and gradually incorporate innovative new materials and techniques as they became available. The phone company couldn’t use a technology that was too disruptive, because the systems engineers at AT&T always had to ensure extreme reliability, compatibility with existing subsystems, and continuity of telephone service. “Innovation in such a system,” Morton declared, “is like getting a heart transplant while running a 4-minute mile!”

To the dismay of Gordon and others in his division, Morton squelched efforts at Bell Labs to pursue what the semiconductor industry began calling large-scale integration, or LSI, which yielded single silicon chips containing more than 1000 components. He even derided people working on LSI as “large-scale idiots,” according to one colleague. Instead, he promoted the idea of hybrid technology incorporating smaller-scale microchips, which could be manufactured with higher yields, into “thin-film” circuits based on metals such as tantalum, in which resistors and capacitors could be etched more precisely than was possible in silicon. Morton championed this approach as the “right scale of integration,” or RSI, another of his favorite phrases.

It proved to be a bad decision, but Morton was adamant. Tanenbaum reckons that it cost AT&T two or three years’ delay in getting microchips into the Bell System for later versions of electronic switching. Even then, the phone company had to purchase most of those chips from other companies instead of making them at Western Electric. Before 1968, when a landmark Federal Communications Commission decision forced its hand, AT&T had tried to avoid buying components from outsiders, because doing so made it more difficult to control their operating characteristics and reliability.

Bell Labs’ focus on robust discrete devices, almost to the exclusion of microchips, started to dissolve in the late 1960s. Engineers at Murray Hill and Allentown began working again on the metal-oxide semiconductor (or MOS) field-effect transistor, which Bell Labs had pioneered in the 1950s and then ignored for half a decade, even as RCA, Fairchild, and other companies ran with it. In a MOS field-effect transistor, current flows through a narrow channel just under the oxide surface layer, modulated by the voltage on a metal strip above it. As the number of components per microchip swelled, the simple geometry and operation of the MOS transistor made it a better option than the junction transistor.

But Fairchild engineers had already solved most of the challenging reliability problems of MOS technology in the mid-1960s, so that company enjoyed a big technological advantage as the devices began finding their way into semiconductor memories.

Once again, it had been Morton’s decision back in 1961 not to pursue the development of MOS devices, in part because they initially exhibited poor reliability and didn’t work at high frequencies. As Anderson recalled, “Morton, who was ever alert to spot a technology loser as well as a winner, was thoroughly convinced of the inherent unreliability of surface devices, as well as…that field-effect devices would be limited to low frequencies.” In the early 1960s, they indeed made little sense for a company already heavily committed to electronic switching based on discrete devices. But when Bell Labs and AT&T began embracing MOS transistors later that decade, they were once again playing catch-up [see “The End of AT&T,” Spectrum, July 2005].

His dim view of microchips didn’t prevent Morton from being showered with accolades from the mid-1960s onward. In 1965, he received the prestigious David Sarnoff Medal of the IEEE for “outstanding leadership and contributions to the development and understanding of solid-state electron devices.” Two years later, he was among the first people to be inducted into the U.S. National Academy of Engineering. In 1971, Morton published an insightful book, Organizing for Innovation, which espoused his “ecological,” systems approach to managing a high-tech R&D enterprise like Bell Labs. In it, he expounded at length on his ideas about adaptive technology and the right scale of integration. Morton was also in demand as a keynote speaker at industry meetings and as a consultant—especially to emerging Japanese electronics and semiconductor companies, where his word was revered.

But there was a dark side to Morton’s personality that few of his Bell Labs colleagues ever glimpsed at work. He had a serious drinking problem, probably exacerbated by frustration at having stalled within the Bell Labs hierarchy. Sharing drinks with Gordon one evening, Morton confided his disappointment that he was still only a vice president after more than a dozen years at that level. Ambitious and aggressive, he yearned for the role of chief executive. Morton also had difficulties at home, and he began spending more evenings at the Neshanic Inn, a local hangout about a mile from where he lived.

Sparks vividly remembers how he was playing golf with Bell Labs president James Fisk that balmy Saturday morning in December 1971 when an anxious messenger rushed out onto the course to give them the tragic news of Morton’s death. Ashen-faced, Fisk asked Sparks to check into what had happened. Sparks went to the hospital where the autopsy was being performed. The doctor told Sparks that Morton’s lungs were singed, indicating he was still alive and breathing when the fire was ignited.

Details of what happened that fateful night came out at the murder trials of the two men, Henry Molka and Freddie Cisson, which occurred the following fall at the Somerset County Courthouse, in Somerville, N.J. According to prosecutor Leonard Arnold, Morton had just returned from a business trip to Europe and was driving back from the airport when he decided to stop by the inn for a drink. But it was nearly closing time, and the bartender refused to serve him. Molka and Cisson told Morton they had a bottle in their car and offered to pour him a drink. They walked out with him to the parking lot and mugged him there, pocketing all of $30.

Gordon figures they thought Morton an easy mark, a well-dressed man in his late 50s with a showy gold watch. But they were mistaken. Morton kept himself in good physical condition and, given his aggressive disposition, probably fought back. A violent struggle must have ensued. After knocking him unconscious, Molka and Cisson threw him in the back seat of his Volvo, drove it a block down the road, and set it on fire with gasoline they extracted from its fuel-injection system. The two men were convicted of first-degree murder and sentenced to life imprisonment, but according to Arnold, they served only 18 years.

Sadly, the world had lost one of the leading proponents of semiconductor technology, the articulate, visionary engineer who turned promising science into the extremely useful, reliable products that were already revolutionizing modern life by the time of his death. Under Morton’s leadership as head of electronics technology at Bell Labs, many other innovative devices were invented that today are ubiquitous in everyday life, including flash memory and the charge-coupled device, both derived from MOS technology. But like the microchip and the MOS transistor, they would be developed and marketed by other companies.

“Jack just loved new ideas,” said Willard Boyle, one of the CCD’s inventors. “That’s what fascinated him, where he got his kicks.” That attitude is probably an important part of the reason that Bell Labs served as such a fount of innovative technologies under his stewardship. But AT&T could realistically pursue only a fraction of these intriguing possibilities, so the Labs focused mainly on the discrete devices and circuits that Morton and other managers considered useful in implementing their immediate, pressing goal of electronic switching. Viewed in that context, the decision to pass on yet another revolutionary, but unproven, technology made good business sense—at least in the short run.

Thus, another, more subtle tyranny of numbers was at work here. Given the seemingly infinite paths that AT&T could follow—and the legal constraints on what it could actually make and sell—it was probably inevitable that outsiders would eventually bring these disruptive new technologies to the masses.

About the Author

Contributing Editor MICHAEL RIORDAN is coauthor of Crystal Fire: The Birth of the Information Age. He teaches the history of physics and technology at Stanford University and the University of California, Santa Cruz.

To Probe Further

An obituary of Jack Morton, written by Morgan Sparks for the National Academy of Engineering, is available on the Web at http://darwin.nap.edu/books/0309028892/html/223.html.

Chapters 9 and 10 of Michael Riordan and Lillian Hoddeson’s Crystal Fire (W.W. Norton, 1997) discuss the invention and development of the junction transistor at Bell Labs. A tutorial on the device, “Transistors 101: The Junction Transistor,” appeared in the May 2004 issue of IEEE Spectrum.

Jack Morton’s Organizing for Innovation: A Systems Approach to Technical Management (McGraw-Hill, 1971) fleshes out his management philosophy.

Chapter 1 of Ross Knox Bassett’s To the Digital Age: Research Labs, Start-up Companies, and the Rise of MOS Technology (Johns Hopkins University Press, 2002) contains the definitive history of the MOS transistor.